This post is part of a series called "Lessons from a bad React codebase". You can read more about this series here.

Things were not off to a great start.

I had just cloned the project and I kept getting this cryptic error message when I tried to install the dependencies using yarn.

# This file contains the result of Yarn building a package (root-workspace-0b6124@workspace:.)
# Script name: postinstall
No matches found: "node_modules/@playkit-js/playkit-js-kava/src/*"

Running the project returned yet another cryptic error message.

error - ./src/hooks/useKaltura.ts:18:27
Module not found: Can't resolve '@playkit-js/playkit-js-kava/src/kava'
> 18 | const { Kava } = await import("@playkit-js/playkit-js-kava/src/kava");
     | ^
  19 | kalturaPlayer.registerPlugin("kava", Kava);

After a few hours of digging, I finally figured out what was going on. But we are getting ahead of ourselves. Let's see how we got here.

The problem

The project needed to use the Kaltura Web Player. Working with videos on the web is hard: they need to be encoded into several formats and resolutions and delivered quickly. Kaltura has a video streaming platform that took care of all this, and the project relied on it for all its video needs.

Unfortunately, the Kaltura web player did not have a React library. It was an embedded player that rendered wherever its embed code was placed. It supported several types of embed codes, but the most customizable one was the "Dynamic embed", where the player could be customized using JavaScript.

<div id="{TARGET_ID}" style="width: 640px;height: 360px"></div>
<script type="text/javascript" src="https://cdnapisec.kaltura.com/p/{PARTNER_ID}/embedPlaykitJs/uiconf_id/{UICONF_ID}"></script>
<script type="text/javascript">
  try {
    var kalturaPlayer = KalturaPlayer.setup({
      targetId: "{TARGET_ID}",
      provider: {
        partnerId: {PARTNER_ID},
        uiConfId: {UICONF_ID}
      },
      playback: {
        autoplay: true
      }
    });
    kalturaPlayer.loadMedia({entryId: '{ENTRY_ID}'});
  } catch (e) {
    console.error(e.message)
  }
</script>

In addition to the Kaltura player, the project also needed to integrate the Kaltura video analytics plugin (Kava). The Kaltura web player supported custom plugins and this analytics plugin provided extra statistics about how each video was played. Like the player, this plugin also relied on embedded scripts.

<script type="text/javascript" src="/PATH/TO/FILE/kaltura-{ovp/tv}-player.js"></script>
<script type="text/javascript" src="/PATH/TO/FILE/playkit-kava.js"></script> <!-- Analytics plugin -->

Integrating plain JS components into a React codebase can be tricky since they are not distributed as npm packages and because they function imperatively and not declaratively like React. Unfortunately, the previous devs had chosen a very poor way to integrate both the player and the plugin into the project.

The poor solution

I am often amazed at the ingenious solutions that junior devs come up with when they are unaware of simpler solutions. I can't say for certain whether that was the case here since I have not met the previous devs. But I have a hunch.

I also think that the core belief that led the previous devs astray was the belief that everything had to be done the "Modern way". Loading scripts using script elements and calling functions on a global can seem like the "2005 JS way". In the "Modern way", dependencies should be managed only using npm. In most cases, you can't use npm to manage plain JS components. But in this instance, the previous devs found a way.

You see, the Kaltura player was an npm package under the hood. It was not published to the npm registry but it was open sourced on Github. So the previous devs decided to use this package directly by leveraging yarn's support for git repos.

package.json

{
  "dependencies": {
    "kaltura-player-js": "git+https://github.com/kaltura/kaltura-player-js.git#v3.9.0"
  }
}

The package contained the scripts that were loaded by the player's embed code. These scripts were only designed to work in a browser environment. However, the project used Next.js, where components also ran on the server. So when the package was imported in the usual fashion, it crashed as it tried to use a browser API that was absent in a Node.js server environment. They got around this with a server/client split and a dynamic import.

// window is not defined in server environments
// So the block below ran only in the browser
if (typeof window !== "undefined") {
  (async () => {
    // This import resolves only when this block runs
    const kalturaPlayer = await import("kaltura-player-js");
  })(); // The async function has to be invoked for the import to run
}

With this, they had everything they needed to render the player within an element rendered by React. Now they had to integrate the analytics plugin and here they got even more creative.

The analytics plugin was also an unpublished npm package on Github. So they added it to the project just like the player package.

package.json

{
  "dependencies": {
    "@playkit-js/playkit-js-kava": "git+https://github.com/kaltura/playkit-js-kava.git#v1.3.1"
  }
}

But here they had problems. The default script exposed by the plugin's package crashed when it was imported into the project since it relied on the KalturaPlayer global that was usually set by the player script. This global was not being set in this setup, so to solve this they started digging around the plugin's source code.

Eventually, they figured out that they could register the plugin by importing its class directly and calling registerPlugin.

if (typeof window !== "undefined") {
  (async () => {
    const kalturaPlayer = await import("kaltura-player-js");
    // Kava is not exported by the @playkit-js/playkit-js-kava package so it has to be imported directly from a source file
    const { Kava } = await import("@playkit-js/playkit-js-kava/src/kava");
    kalturaPlayer.registerPlugin("kava", Kava);
  })(); // The async function has to be invoked for the imports to run
}

But here they hit another problem. The plugin's source code used Flow for type checking. Flow's type annotations are not valid JavaScript or TypeScript syntax, so the source files couldn't be imported into a Next.js project as they were. The solution? Strip the Flow types from the cloned package using flow-remove-types.

package.json

{
  "scripts": {
    "kava-strip-flow": "flow-remove-types node_modules/@playkit-js/playkit-js-kava/src/* -d ./"
  }
}

And for added convenience, strip the types automatically after the dependencies are installed using a postinstall script.

package.json

{
  "scripts": {
    "postinstall": "yarn kava-strip-flow"
  }
}

Why did it break?

Let's get back to those cryptic error messages. Why were they occurring?

As it turns out, the project had broken due to a very obscure change in yarn. The project was originally bootstrapped using yarn v1 because newer versions didn't exist yet. Starting with v2, yarn changed the way that it handled packages from git. Instead of just cloning the package repo into node_modules, yarn now ran pack on the package after cloning it from the repo.

pack creates a compressed tar file from the package. It is usually used when a package is being published to the npm registry to create the compressed file that will be uploaded there. Why does this matter? Well, in addition to compressing the package files, pack also follows several file inclusion rules. Most notably, it follows the rules configured in a .npmignore file.

On my machine, I had set yarn to use the latest "stable" version by running yarn set version stable which had set yarn@3.6.1 as the default on my machine. If a specific version of yarn was not set in the packageManager field in package.json or in a .yarnrc.yml file, then this version was used whenever yarn was run in a project.
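As a rough illustration of the version check described above, here is a minimal Node.js sketch that inspects a parsed package.json object. The function names readPinnedPackageManager and describeYarnPin are hypothetical, yarn@1.22.19 is only an example value, and the sketch looks at the packageManager field alone (it ignores any .yarnrc.yml settings).

```javascript
// Hypothetical helper: reads the "packageManager" field of a parsed package.json.
// Returns { name, major } when a version is pinned, or null when nothing usable is set.
function readPinnedPackageManager(pkg) {
  const field = pkg.packageManager;
  if (typeof field !== "string") return null;
  // Expected shape: "<name>@<major>.<minor>.<patch>", optionally followed by a hash
  const match = /^([a-z]+)@(\d+)\.\d+\.\d+/.exec(field);
  if (!match) return null;
  return { name: match[1], major: Number(match[2]) };
}

// Hypothetical check for this project: the postinstall workflow only worked with yarn v1
function describeYarnPin(pkg) {
  const pinned = readPinnedPackageManager(pkg);
  if (pinned === null) return "unpinned"; // the machine's default yarn would be used
  if (pinned.name === "yarn" && pinned.major === 1) return "ok";
  return "mismatch";
}

console.log(describeYarnPin({}));                                 // unpinned
console.log(describeYarnPin({ packageManager: "yarn@1.22.19" })); // ok
console.log(describeYarnPin({ packageManager: "yarn@3.6.1" }));   // mismatch
```

A check like this could run in CI or before install to flag a project that silently depends on one major version of its package manager.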

So to put all of these pieces together, the project broke because it didn't have a specific version of yarn concretely set within it. This led to yarn using the default version on my machine, which ran pack on all packages that were installed from git. pack followed the rules in the .npmignore file and the plugin repo had a .npmignore file set up to omit the src folder. So running pack deleted the src folder from the cloned package and broke the integration which referenced files from it.
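The chain of events above can be modelled in a few lines of JavaScript. This is a deliberately simplified sketch, not yarn's or npm's actual algorithm: the simulatePack function is hypothetical, it only understands whole directory names (real ignore files support glob patterns), and the file listing is invented for illustration.

```javascript
// Simplified model of "pack": drop every file that lives under an ignored directory.
function simulatePack(files, ignoredDirs) {
  return files.filter(
    (file) => !ignoredDirs.some((dir) => file === dir || file.startsWith(dir + "/"))
  );
}

// Hypothetical file listing for the cloned plugin package
const clonedFiles = [
  "package.json",
  "dist/playkit-kava.js",
  "src/kava.js",
  "src/index.js",
];

// yarn v1: a plain clone, so nothing is removed
const withYarn1 = clonedFiles;

// yarn v2+: the package is packed, and the ignore rules omit the src folder
const withYarn2Plus = simulatePack(clonedFiles, ["src"]);

console.log(withYarn1.includes("src/kava.js"));     // true
console.log(withYarn2Plus.includes("src/kava.js")); // false
```

Once the src entries are gone, both the postinstall glob and the dynamic import of a file under src have nothing left to resolve, which matches the two error messages at the top of this post.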

Not gonna lie, I felt quite pleased after figuring all of this out.

Why is this a poor solution?

Here is why I think this is a poor solution.

Unsupported usage

The Kaltura web player and the analytics plugin were never intended to be used as npm packages. Their developers only supported the usage methods that they documented, and these components were meant to be used as embedded scripts. These packages probably existed due to internal reasons at Kaltura and they could change or break at any time as internal requirements changed.

Many moving pieces

This solution relied on several moving pieces such as yarn's git support, postinstall scripts, flow-remove-types, dynamic imports and a client/server split. If any of these pieces broke or changed, the whole solution would fail and finding the cause would involve looking into many of these pieces.

Broken abstractions and reliance on internals

We rely on abstractions while coding. Tools and libraries provide concepts and interfaces and we can use these without thinking about what goes on under the hood. The internal implementation details of the tools and libraries are abstracted away from us and the developers of tools and libraries will only ensure that the concepts and interfaces continue to function. So any of the other internal details can change at any time.

Several abstractions are broken in this solution. The first is the abstraction of an npm package. npm packages are intended to be used through module imports, where we import the items exported by a package. By relying on this abstraction, we don't have to care about where items live within a package. We can import them and expect that they will be provided to us (e.g. import React from 'react'; or import { useEffect } from 'react';). This solution breaks this abstraction by using unexported items (the src folder). Now we have to care about the package's internal file structure and changes to it could break our code.

The second is the abstraction of Node.js's module system. With it, we can use packages mentioned in package.json using the import or require syntax. We don't need to know how the package manager (npm, yarn) works or how the package's files are stored on the filesystem. This abstraction is broken when the flow removal script works directly with the node_modules/@playkit-js/playkit-js-kava/src folder. Now we have to care about the package manager placing files specifically within that folder and changes to that could break our code. (And they did.)

TL;DR: This solution is hacky.

A better solution

The current solution locked us to yarn v1 since newer versions of yarn broke its workflow. I'm sure that we could have come up with another way to get the package's source files (git submodules?) but I also knew that this solution would continue to cause us more issues in the future. So I set about reworking it.

The main issue I wanted to address was this solution's reliance on unexported items from unsupported, unpublished npm packages. When working with third-party code, it is best to use it exactly as intended, and since the Kaltura player and its plugins were meant to be used as embedded scripts, that's how we should use them.

In React we can render script elements just like any other HTML element. So the player's embed code can be rendered using React like so:

const KalturaPlayer = ({ targetId, partnerId, uiConfId }) => (
  <>
    <div id={targetId} style={{ width: '640px', height: '360px' }} />
    <script type="text/javascript" src={`https://cdnapisec.kaltura.com/p/${partnerId}/embedPlaykitJs/uiconf_id/${uiConfId}`} />
  </>
)

The embed code also needs some JavaScript to set up the player inside the target element. This can be placed in a useEffect hook, which runs after the elements have been added to the DOM.

import { useEffect } from 'react';

const KalturaPlayer = ({ targetId, partnerId, uiConfId, entryId }) => {
  useEffect(() => {
    // This component shadows the KalturaPlayer global set by the embed script,
    // so the global is read from window explicitly
    const kalturaPlayer = window.KalturaPlayer.setup({
      targetId,
      provider: {
        partnerId,
        uiConfId
      },
      playback: {
        autoplay: true
      }
    });
    kalturaPlayer.loadMedia({ entryId });
  }, [])

  return (
    <>
      <div id={targetId} style={{ width: '640px', height: '360px' }} />
      <script type="text/javascript" src={`https://cdnapisec.kaltura.com/p/${partnerId}/embedPlaykitJs/uiconf_id/${uiConfId}`} />
    </>
  );
}

This is all we need to render the player. If we want to expose more of the player's functionality to React, we can store the reference to the player instance in a ref and then "synchronize" React's state to the player using useEffect hooks. We should also destroy the player instance when the component unmounts to free up resources.

import { useRef, useEffect } from 'react';

const KalturaPlayer = ({ targetId, partnerId, uiConfId, entryId }) => {
  const playerRef = useRef(null);

  useEffect(() => {
    // This component shadows the KalturaPlayer global set by the embed script,
    // so the global is read from window explicitly
    playerRef.current = window.KalturaPlayer.setup({
      targetId,
      provider: {
        partnerId,
        uiConfId
      },
      playback: {
        autoplay: true
      }
    });

    // Destroy this instance of the player when this component unmounts
    return () => {
      if (playerRef.current) {
        playerRef.current.destroy();
      }
    }
  }, [])

  // This useEffect hook runs after the hook above
  // Every time the entryId prop changes, that change will be synchronized with the player instance
  useEffect(() => {
    if (playerRef.current && entryId) {
      playerRef.current.loadMedia({ entryId })
    }
  }, [entryId])

  ...
}

The same approach works in Next.js, but instead of rendering the script element directly, we need to use the Script component from next/script. The Script component changes how script elements are injected into the page so that they work alongside Next.js's page hydration.

import { useEffect } from 'react';
import Script from "next/script";

const KalturaPlayer = ({ targetId, partnerId, uiConfId, entryId }) => {
  useEffect(() => {
    // This component shadows the KalturaPlayer global set by the embed script,
    // so the global is read from window explicitly
    const kalturaPlayer = window.KalturaPlayer.setup({
      targetId,
      provider: {
        partnerId,
        uiConfId
      },
      playback: {
        autoplay: true
      }
    });
    kalturaPlayer.loadMedia({ entryId });
  }, [])

  return (
    <>
      <div id={targetId} style={{ width: '640px', height: '360px' }} />
      <Script src={`https://cdnapisec.kaltura.com/p/${partnerId}/embedPlaykitJs/uiconf_id/${uiConfId}`} />
    </>
  );
}

Now we need to tackle the analytics plugin. In plain React, the plugin script can be placed after the player's script, similar to the official instructions.

const KalturaPlayer = ({ targetId, partnerId, uiConfId }) => {
  ...
  return (
    <>
      <div id={targetId} style={{ width: '640px', height: '360px' }} />
      <script type="text/javascript" src={`https://cdnapisec.kaltura.com/p/${partnerId}/embedPlaykitJs/uiconf_id/${uiConfId}`} />
      <script type="text/javascript" src="/js/playkit-kava.js" /> {/* Self hosted analytics plugin */}
    </>
  )
}

However, Next.js's Script component injects elements out of order. So to achieve the same result, we need to manually control when the Script component is rendered.
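The ordering requirement itself (start loading the plugin only after the player script has finished) can be sketched without any framework. This is a minimal illustration under assumptions, not part of Kaltura's or Next.js's API: loadScriptsInOrder and appendScript are hypothetical helpers, and the loader is injected so the sequencing logic can be exercised outside a browser.

```javascript
// Loads scripts strictly one after another.
// `loadScript` is an injected function that returns a promise which
// resolves once the given URL has finished loading.
async function loadScriptsInOrder(urls, loadScript) {
  const loaded = [];
  for (const url of urls) {
    // The next script is not requested until this one has finished
    await loadScript(url);
    loaded.push(url);
  }
  return loaded;
}

// One possible browser loader (assumption: only called where `document` exists)
function appendScript(url) {
  return new Promise((resolve, reject) => {
    const element = document.createElement("script");
    element.src = url;
    element.onload = () => resolve(url);
    element.onerror = () => reject(new Error(`Failed to load ${url}`));
    document.head.appendChild(element);
  });
}
```

In a browser this could be called as loadScriptsInOrder([playerUrl, "/js/playkit-kava.js"], appendScript), where playerUrl stands for the embed URL built from the partner and UI config IDs. The component that follows expresses the same sequencing with React state.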

import { useState } from 'react';
import Script from "next/script";

const KalturaPlayer = ({ targetId, partnerId, uiConfId }) => {
  ...
  const [loadKava, setLoadKava] = useState(false);

  const handlePlayerReady = () => {
    setLoadKava(true);
  }

  return (
    <>
      <div id={targetId} style={{ width: '640px', height: '360px' }} />
      <Script src={`https://cdnapisec.kaltura.com/p/${partnerId}/embedPlaykitJs/uiconf_id/${uiConfId}`} onReady={handlePlayerReady} />
      {loadKava && (
        // Self hosted analytics plugin
        <Script src="/js/playkit-kava.js" />
      )}
    </>
  )
}

The results

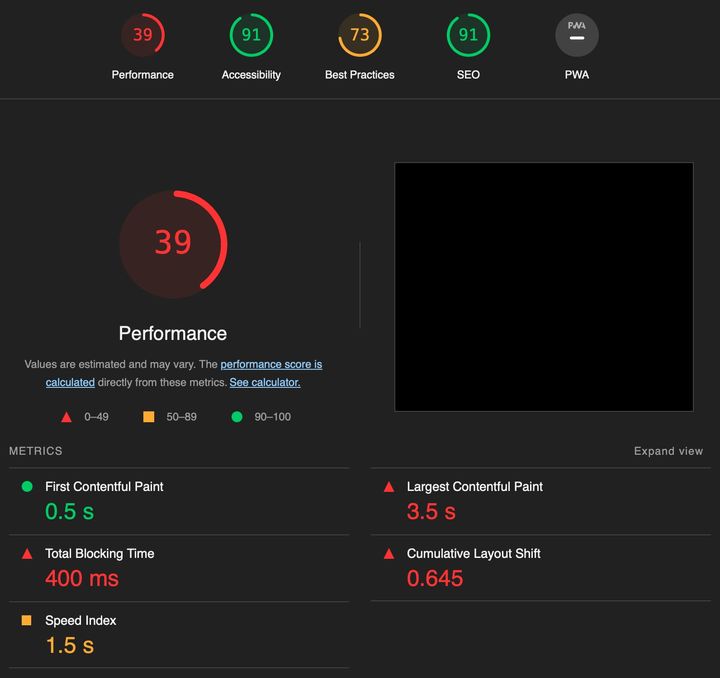

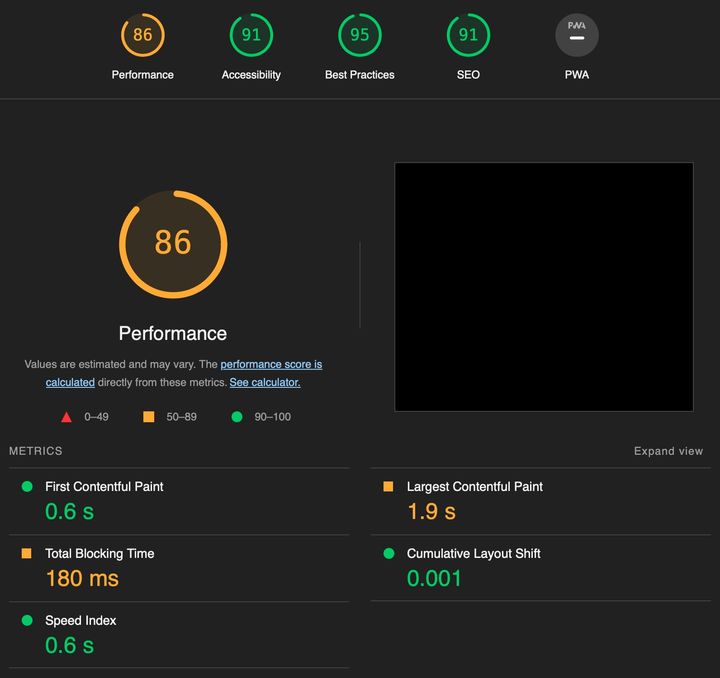

We adopted this solution during a larger rework of the video features of the site. The results were very positive.

By using the Script component, the Kaltura player and its plugin were removed from the site's JS bundle. Now they loaded only when a video was played. This greatly improved the initial load metrics of the site. (Since the rework included some other changes, some of the improvements to the metrics, such as cumulative layout shift, were probably due to those changes. But I think the biggest gains came from this change.)

This solution also withstood the test of time. Since it reduced the number of moving pieces and constrained all the functionality around the video player to a single component, it was much easier to maintain as our requirements for the video player changed. We also upgraded several major dependencies (Next.js, React, Typescript) and tools (Node.js) and it survived these changes without breaking a sweat.

Takeaways

Don't rely on internals

Third-party code exposes a fixed set of functionality and a way to use it. While you can access more functionality through some code acrobatics, it is not a great idea to do so. These unexposed internals will change over time and when they do, you will have a hard time.

Not everything needs the "React way" or the "Modern way"

A common misconception, especially amongst fresh developers, is the belief that everything needs to be done in the "React way" or in the "Modern way". They will then go to unreasonable lengths to stay within these paradigms.

Each paradigm has its pros and cons. While you should stick to a paradigm for the sake of consistency, there are a few cases where you can achieve big gains by opting out of it.

Consider many solutions

There are always many solutions to a certain problem. I think all of us are guilty of charging ahead with the first solution that pops into our heads. But a few extra minutes considering multiple solutions can save hours in the future.